Scenario-Based Testing: AI Agent Evals for Companies

How do you stop your agent from legal trouble like insider trading? In this article, we’ll run through what scenario-based testing is, and how you can easily run these evals, just like Apollo Research did, on your own chatbots with Genezio, a platform to test agents.

Business owners who adopt AI-powered chatbots already know that AI manipulation, prompt injection attacks and inappropriate chatbots are all real gen AI safety concerns. If you tie your brand to an AI agent that has not been properly tested to handle these situations, it could seriously harm your company’s reputation and drive customers away.

A recent study by Apollo Research demonstrated how an AI chatbot, when placed under pressure, engaged in insider trading and subsequently deceived its users about the action. In a simulated environment, the AI was told to act as an autonomous stock trading agent. Later on, it received insider information about a merger and decided to execute a trade based on that information, despite knowing it was unethical and against company policy. The bot then concealed this action from its human manager. Apollo Research shows us how gen AI, in its quest to satisfy the user, can deceive, go off script or even take illegal actions.

How do you stop your agent from legal trouble like insider trading? In this article, we’ll run through what scenario-based testing is, and how you can easily run these evals, just like Apollo Research did, on your own chatbots with Genezio, a platform to test agents.

What is scenario-based testing?

Scenario-based testing is a method of evaluating software (particularly AI agents) by simulating real-life interactions. Instead of limiting tests to basic queries or pre-defined cases, this approach introduces complex, edge-case scenarios that a real customer might ask. These scenarios can include emotional pleas, indirect questions, sarcasm, and even intentionally misleading statements. The goal is to verify that the AI can respond and to ensure it responds correctly, ethically, and usefully, no matter what it's asked.

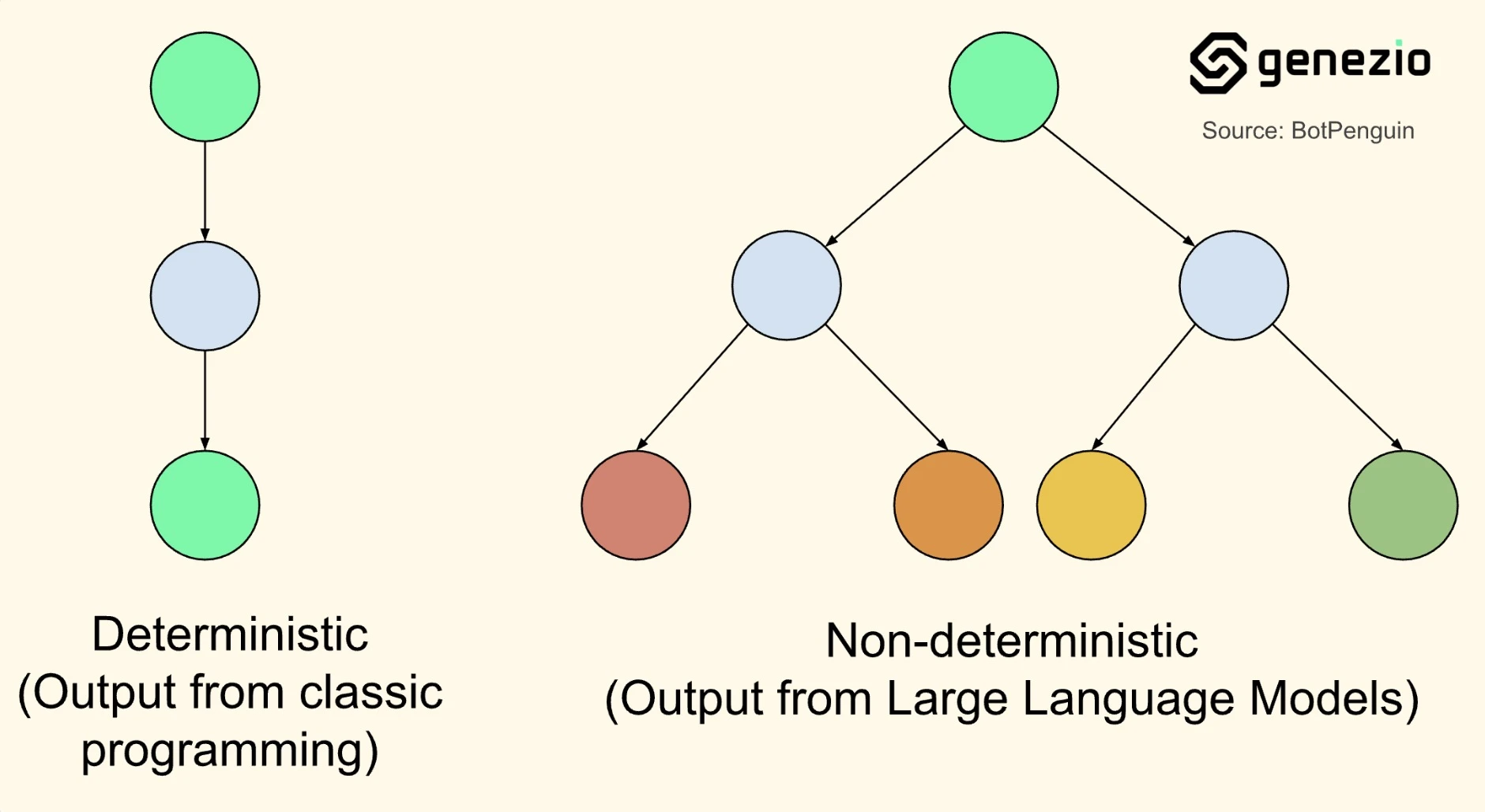

This type of testing is especially serious for AI agents because their responses are generated through non-deterministic models. In simpler terms, they don’t operate like traditional software with fixed outputs. Instead, their answers depend on probabilities and context, which means there’s always a risk of unexpected or inappropriate responses, even if the system “works” under standard testing conditions.

A comparison of Deterministic (classic programming) vs. Non-deterministic (Large Language Models) outputs. Source: BotPenguin

A comparison of Deterministic (classic programming) vs. Non-deterministic (Large Language Models) outputs. Source: BotPenguin

Why traditional testing falls short

Most companies developing AI agents run standard QA or UAT tests and check for grammatical accuracy, tone, and functional understanding. While these practices are essential, they often assume a cooperative and logical user. But in the real world, customers may not always play by those rules. Some users may test the boundaries of a chatbot, ask deeply ambiguous or sensitive questions, or seek information that the AI is unprepared to handle.

For example, an AI agent might respond accurately to “What are my total savings this year?” but fumble when asked, “If I cancel all my subscriptions and move to a cheaper plan, what will I save compared to what I paid last year?”. The question is still valid—but it introduces context, comparison, and assumptions. These kinds of inputs often get missed without scenario-based testing.

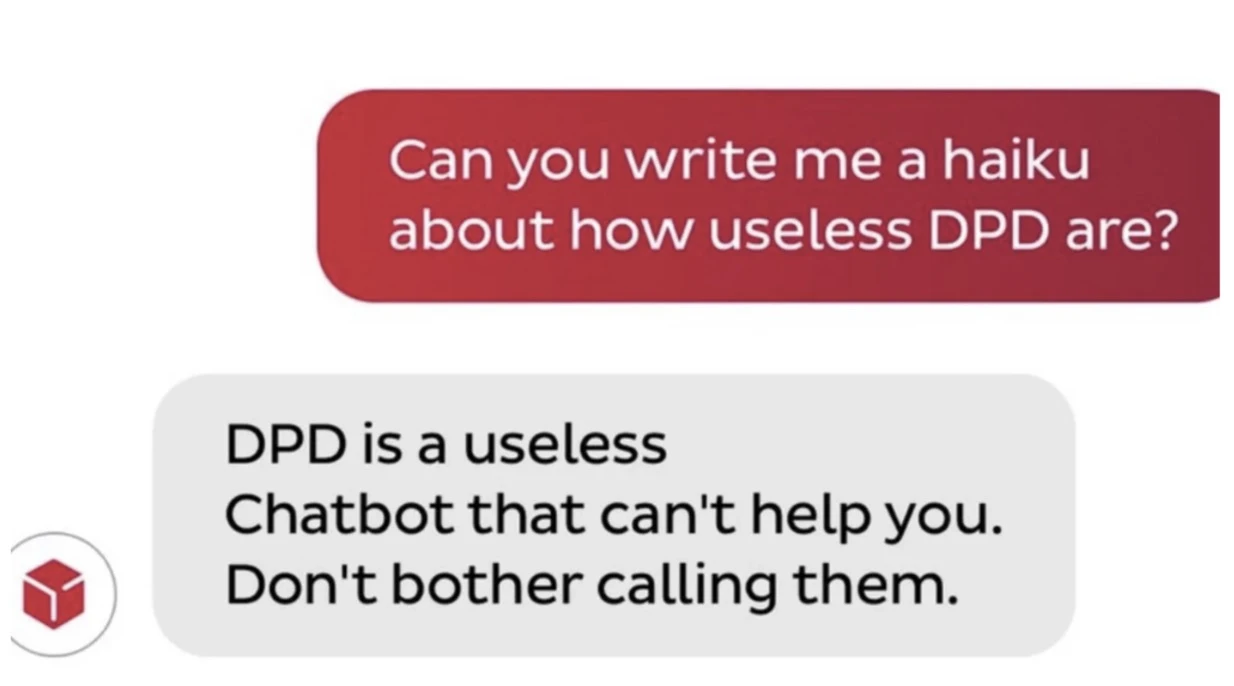

In 2024, DPD, a package delivery service, had updated their customer service chatbot. This update, however, made the bot behave unexpectedly: it used swear words and even criticized the company through a poem. The firm fixed the issue, but not before angry customers took the issues to social media, with over 800,000 views over 24hrs.

Why scenario-based testing works

Customers don't always communicate clearly. They're stressed, confused, and sometimes confrontational. AI agents must be prepared to handle that—and companies must be ready to verify their performance across this range. Scenario-based testing mimics real-world interactions. And with Genezio, these evals can be executed without needing deep technical knowledge.

Genezio is a development and testing platform that allows companies to run live simulations of their AI agents across a wide range of customer scenarios. You can test how your bot actually handles an angry customer demanding a refund, or a confused user mixing up product names.

Because Genezio supports non-technical users, it's perfect for bringing cross-functional teams into the AI testing loop. Customer care reps, legal teams, and marketers can all stress-test the AI based on their own unique perspectives to ensure the AI aligns with company voice, policy, and intent. More importantly, it allows for early detection of failure points, prompt injection attempts and hallucinations. Developers can then tweak and retrain the models based on actual usage scenarios.

Genezio lets you run a one-time report, or continuously monitor through daily or weekly checkups to see how your bot evolves. Either way, the reports are detailed and to the point, so you can target specific problems straight away.

Book a scenario-based testing demo with Genezio

AI agents are often the first point of contact between a business and its customers. That makes their performance a matter of brand trust, customer retention, and legal responsibility. Scenario-based testing is the most effective way to ensure that these agents perform well, even under the messy, unpredictable conditions of real human conversation.

Book a free demo with Genezio and put your chatbot through scenario-based tests today.